An ongoing challenge in building robust object detection algorithms is their thirst for large datasets. Transfer learning helps: because it starts from a model that has already had weeks of pre-training, a rapid prototype is possible with as few as 10 images and can be trained in as little as one hour!

Of course, to create a more robust algorithm, large datasets are required from a wide range of environments, to help the AI generalise to new contexts.

Annotation (the process of a human labelling the data and, for object detection, marking where the object is) is slow and expensive. How might AI speed up this process?

As part of the work on our Search and Rescue use case, I built a pipeline for Active Learning, or Semi-Supervised Learning. This was kicked off by a fantastic workshop with two of Microsoft’s AI experts, Julian Lee and Ananth Prakash. This isn’t a fully autonomous system that can create new data; however, it massively speeds up the process of building data sets.

First, a small dataset (~200 images) is annotated, and a very rough initial model is created. This model is then applied to ~2000 new images and makes predictions on where it believes the objects are in these new training images. A human then checks over the AI’s predictions, and corrects any mistakes.

Next, all 2,200 images (the original 200 plus the 2,000 corrected predictions) are used to train a better version of the AI. This improved AI is then used to make predictions on ~10,000 new images, and the process continues iteratively.
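The loop described above can be sketched in a few lines of Python. This is only a minimal illustration, not the pipeline I actually built: `grow_dataset`, `train` and `review` are hypothetical names, with `train` standing in for whatever training job produces a model and `review` standing in for the human checking and correcting each prediction.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]      # (x, y, width, height)
Labelled = Tuple[str, List[Box]]     # (image path, verified boxes for that image)
Model = Callable[[str], List[Box]]   # takes an image path, returns predicted boxes


def grow_dataset(
    seed: List[Labelled],
    batches: Sequence[Sequence[str]],
    train: Callable[[List[Labelled]], Model],
    review: Callable[[str, List[Box]], List[Box]],
) -> List[Labelled]:
    """Grow a labelled data set by alternating training, prediction and human review."""
    labelled = list(seed)
    for batch in batches:
        # Retrain on everything a human has verified so far.
        model = train(labelled)
        for path in batch:
            proposed = model(path)               # the AI's guess at the boxes
            corrected = review(path, proposed)   # the human fixes any mistakes
            labelled.append((path, corrected))
    return labelled
```

With a seed of ~200 images and batches of ~2,000 and then ~10,000 images, each pass trains on a larger verified set than the one before. Only reviewed annotations are ever added to `labelled`, so the model never trains on its own unchecked output.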

This process allows for the extremely rapid creation of new object detection data sets. My initial tests were able to create about 40GB of data in 5 minutes, which was then passed on to the human data trainer to validate.

Of course, this is not a fully autonomous system, and for good reason! The “human-in-the-loop” is a crucial part of the system, and prevents the AI going off the rails. If the AI makes a couple of initial mistakes and the human doesn’t correct them, those errors are trained back into the next model and propagate through every later round.

Another method for creating data sets automatically is to use traditional computer vision techniques, such as object tracking, to create new annotations. The human inputs the initial location of the object of interest, and the application tracks it between frames as it moves. My initial tests of this used the Visual Object Tagging Tool. This works well; however, if the object of interest leaves the frame and then comes back into view, the tracking process must be reinitiated.
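To give a feel for how tracking can carry an annotation from one frame to the next, here is a toy sketch. It is not how the Visual Object Tagging Tool tracks objects; it is a simple template-matching stand-in that I am assuming purely for illustration. The names `track_box` and `patch_difference` are hypothetical, and frames are plain grayscale lists of pixel rows to keep the example dependency-free.

```python
from typing import List, Tuple

Frame = List[List[int]]           # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]   # (x, y, width, height)


def patch_difference(a: Frame, ax: int, ay: int,
                     b: Frame, bx: int, by: int, w: int, h: int) -> int:
    """Sum of squared pixel differences between two same-sized patches."""
    return sum(
        (a[ay + dy][ax + dx] - b[by + dy][bx + dx]) ** 2
        for dy in range(h)
        for dx in range(w)
    )


def track_box(prev_frame: Frame, next_frame: Frame, box: Box, search: int = 8) -> Box:
    """Find where the contents of `box` in prev_frame moved to in next_frame.

    Only positions within `search` pixels of the old location are tried,
    and only positions where the box fits fully inside the frame.
    """
    x, y, w, h = box
    frame_h, frame_w = len(next_frame), len(next_frame[0])
    best_score, best_box = None, box
    for ny in range(max(0, y - search), min(frame_h - h, y + search) + 1):
        for nx in range(max(0, x - search), min(frame_w - w, x + search) + 1):
            score = patch_difference(prev_frame, x, y, next_frame, nx, ny, w, h)
            if best_score is None or score < best_score:
                best_score, best_box = score, (nx, ny, w, h)
    return best_box
```

The human supplies the first `box`, and each later frame reuses the previous result as its starting point. Note that this sketch always returns its best guess, so it has no way of noticing that the object has left the frame, which is exactly the situation where tracking has to be restarted by hand.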

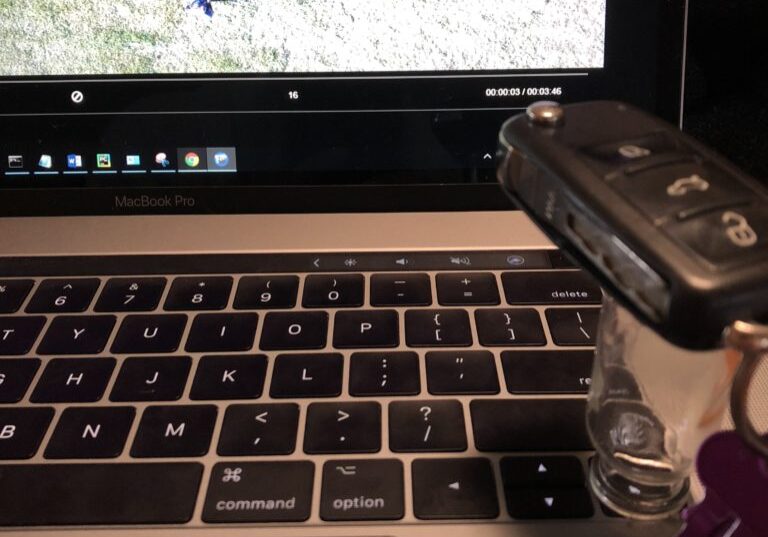

Sometimes the best hacks are lo-fi. Here is my "automated" data trainer: a small vase and the weight of my keys hold the "right" arrow down, cycling through all of my data sets and automatically creating new training data using object tracking.

This strategy of Active Learning will be a key enabler for my future Proofs of Concept!